
I build revenue-driven products with AI—full-stack in Elixir, Go, Svelte & Rust (plus Python when it adds value).

I help small businesses and e-commerce teams automate operations, reduce manual work, and sell more. From booking engines and supplier portals to chat agents and analytics, I deliver reliable backends (Elixir/Go), fast UIs (Svelte), and high-performance components (Rust). Where it makes sense, I integrate private, secure AI—tuned to your data—to speed up responses and increase conversions.

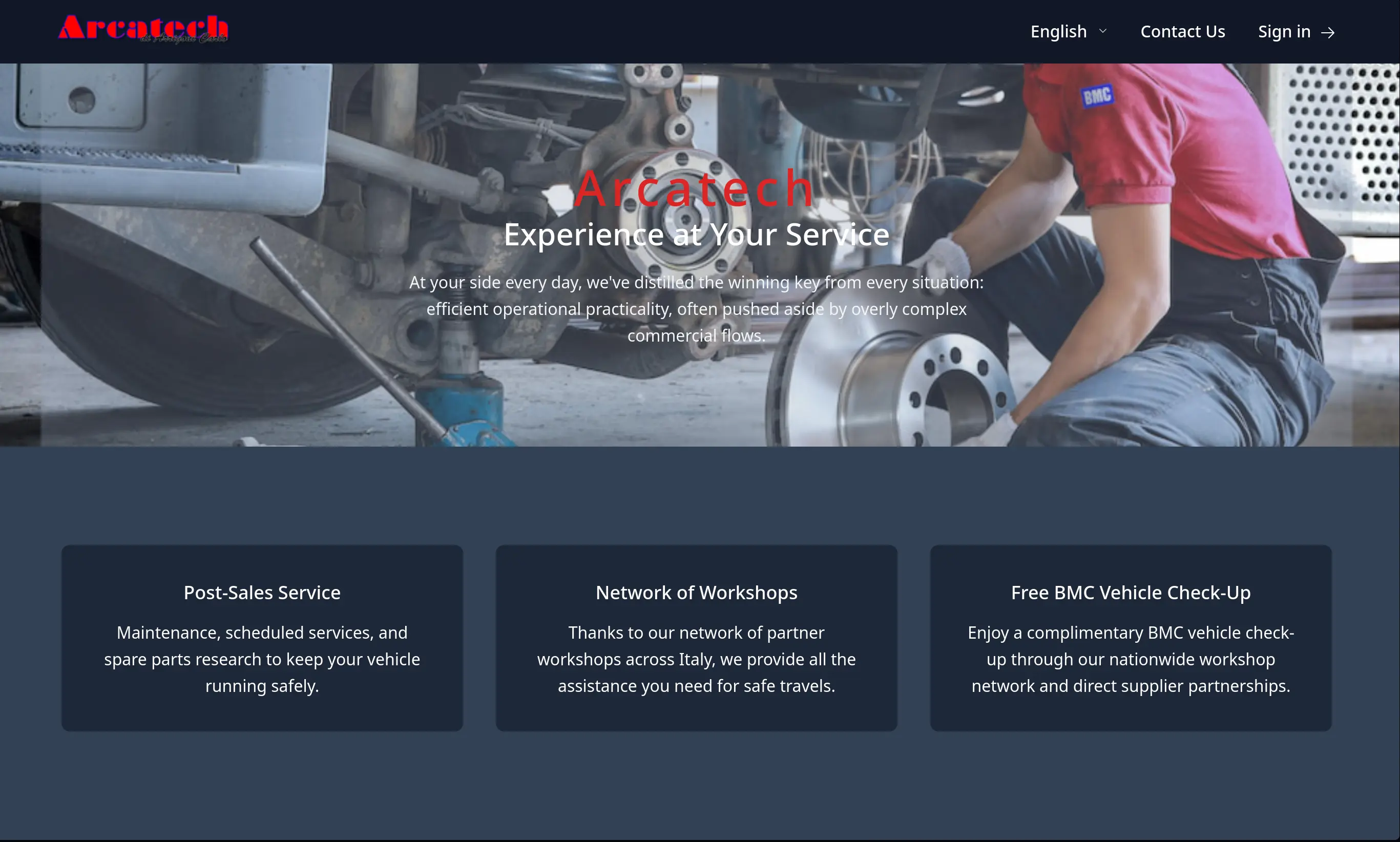

Arcatech

Arcatech is an online management system for creating catalogs of mechanical parts, taking orders, and orchestrating the entire customer–supplier workflow. An AI chat agent requests availability and prices for out-of-stock parts, contacts supplier reps via email or WhatsApp, and updates the database in real time. Customers can send photos and details even if they don’t know the part’s exact name or reference number; the system identifies the part and adds it directly to the order. Built end-to-end with Elixir/Phoenix LiveView in just 4 weeks.

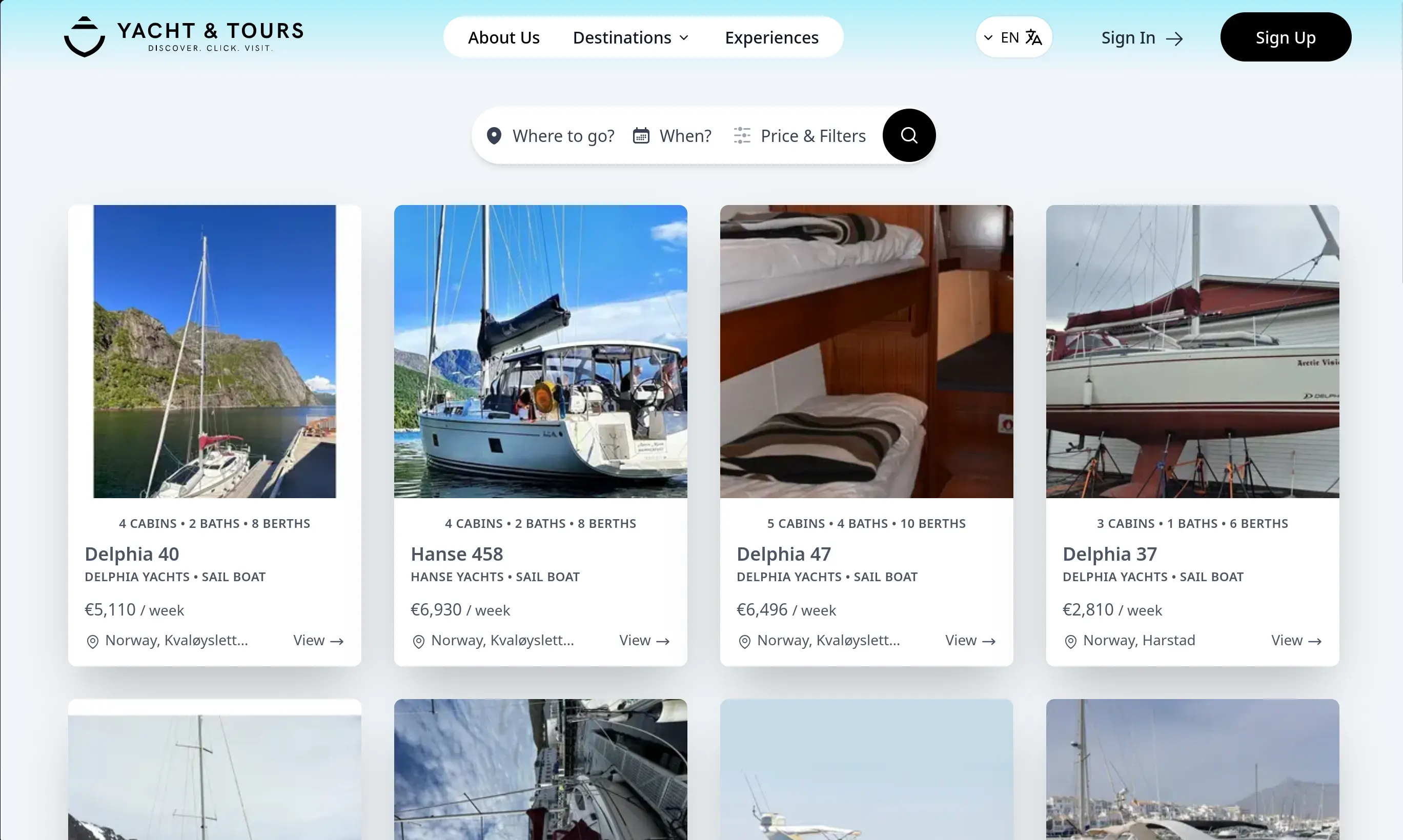

Yacht & Tours

Yacht & Tours is a boat-booking platform with advanced price search and filters, elegant calendar and pricing management, guest–host chat, and real-time notifications for bookings and availability. It supports Stripe payments and electronic invoicing, plus a lightweight admin dashboard for quotes and users, with synchronization to the MMK boat-booking system.

Built with Elixir/Phoenix LiveView and a few Svelte components in ~3 months. The architecture is scalable and distributed—ideal for seasonal traffic spikes and growth.

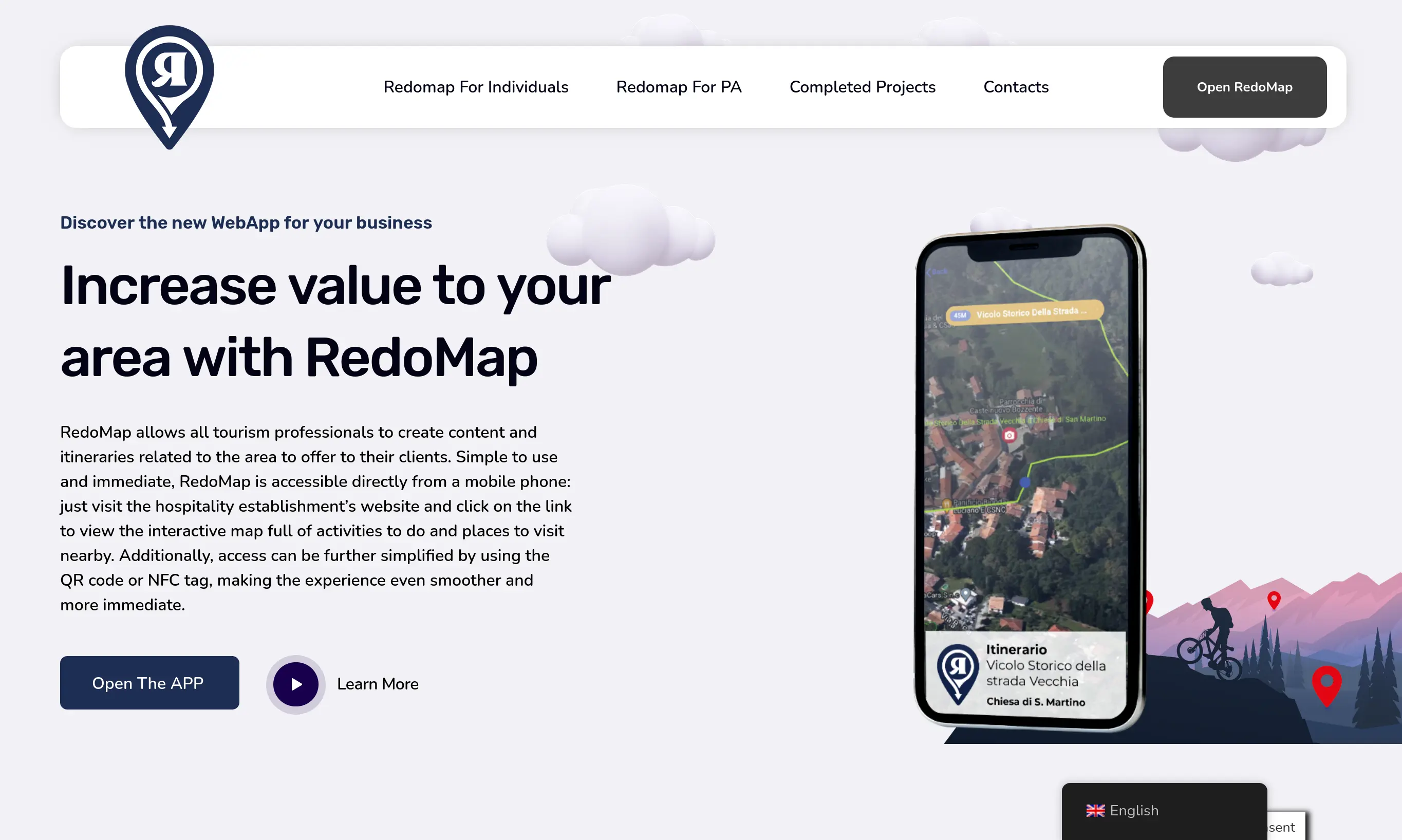

Redomap

Redomap is a Svelte-based web app that drops into any website to offer navigator-style exploration—no installs, no registration. Scan a QR code and go. It includes paid map sales, an interactive dashboard to build maps, and audio guides delivered directly in the browser.

AI assists with content creation and review, while territory analytics reveal what truly interests visitors. Hotels and points of interest use Redomap to offer premium maps and a unified audio-guide + navigation experience. Developed over multiple versions, live for 5+ years, and trusted by a growing base of clients.

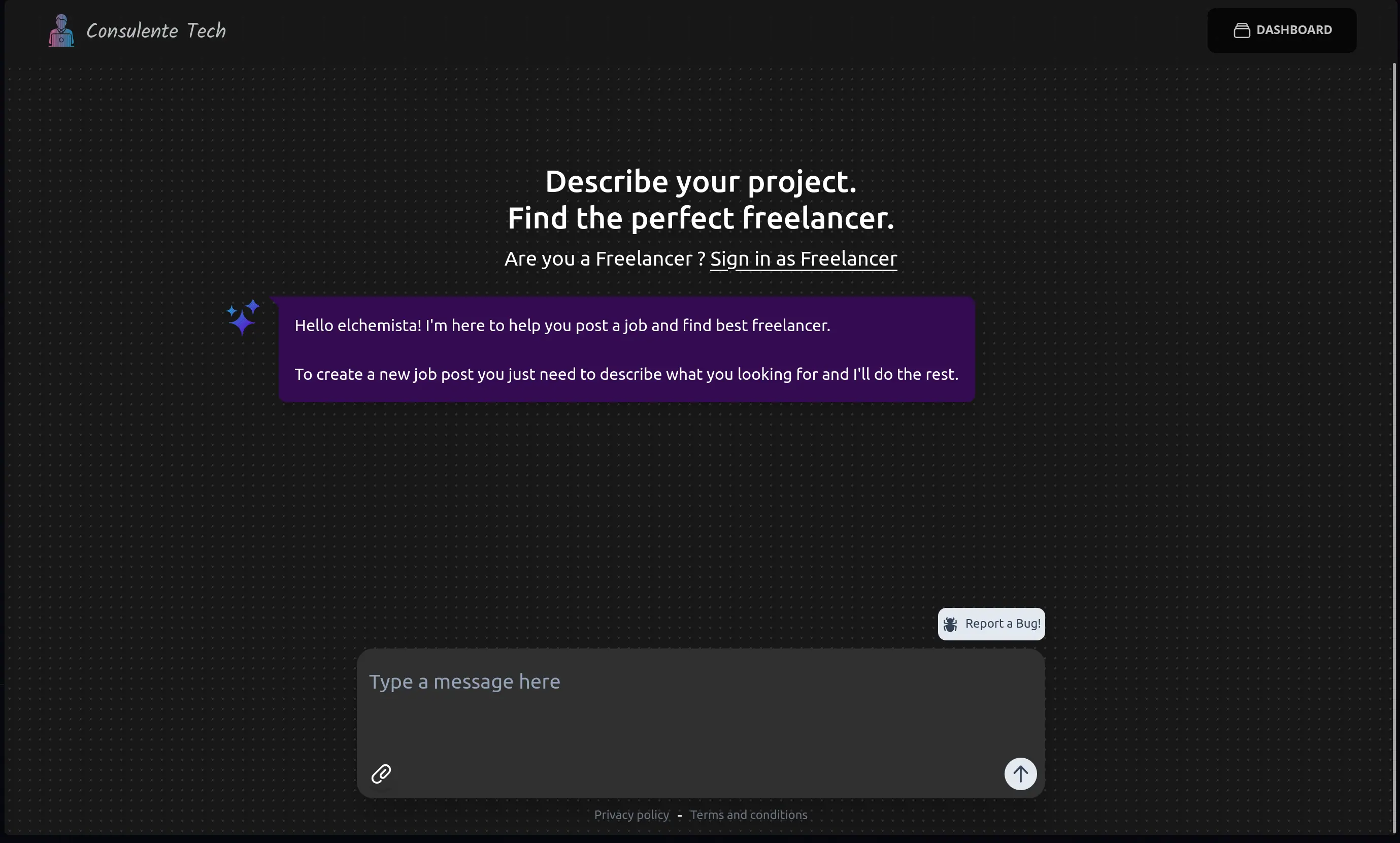

Consulente

Consulente Tech (beta) streamlines job posts and proposals with AI and helps clients connect with the right freelancers. Clients publish needs, receive smart, structured quotes, and chat directly, while freelancers can team up as organizations to showcase services. The first version is live and already gathering valuable feedback for continuous improvement.

Yuriy Zhar

Passionate web developer. Love Elixir/Erlang, Go, Deno, and Svelte. Interested in ML, LLMs, astronomy, and philosophy.

Enjoy traveling and napping.

This website began as my personal blog, but after building it, I realized I enjoyed development more than writing posts. I kept adding new features instead of content, so I shifted the focus of this page to sharing ideas and projects.

With the rise of AI, I started building more RAG (Retrieval-Augmented Generation) applications and AI tools, so most of my projects now center on this area. I primarily work with Elixir and Go, and occasionally use Python when I need to fine-tune models.